Parallelizing Borůvka's Algorithm

(Final Writeup)

By Kathleen Fuh (kfuh) and Shreya Vemuri (shreyav)

Summary

For our project, we wrote a sequential version of Borůvka’s algorithm for finding minimum spanning trees in C++ and optimized its performance on a CPU using OpenMP and parallelizing techniques. In the process of parallelizing, we explored both edge and star contraction techniques for Borůvka’s algorithm. We then analyzed the performance on the GHC machines with various numbers of threads.

Background

A spanning tree of a connected, undirected graph G with vertex set V and edge set E is a connected subgraph of G that includes all vertices of G and exactly |V|-1 edges. When given a connected, weighted, undirected graph, the minimum spanning tree (MST) is a spanning tree with minimum total edge weight.

The key property that drives many MST algorithms is the light edge property. This property states that for any cut of a connected, undirected, weighted graph G, the edge with minimum weight that crosses the cut must be in the MST.

Borůvka’s algorithm is a well-known parallel algorithm for finding MSTs. It makes use of the light edge property by observing that the minimum weight edge incident on any vertex v must be in the MST (the cut of the graph in this case partitions the graph into one set containing the single vertex v and a second set containing the rest of the graph). This observation introduces an axis of parallelism along vertices because edges in the MST can be selected in parallel by looking at each vertex. Using this idea, Borůvka’s algorithm works as follows

While there are still edges:

- Find the minimum weight edge incident on each vertex (these edges will form at least one connected component)

- Contract each connected component, creating a new super vertex for all vertices in the component

- Add the minimum weight edge between super vertices to the MST and remove all other redundant edges.

There are two different techniques to approaching this algorithm when contracting the graph: edge contraction and star contraction:

- Edge contraction: Edge contraction involves finding the min edge incident to each vertex (in the first iteration) and each component thereafter. We then add these edges to our MST and perform the necessary contractions in the contraction stage. This utilizes the light-end property as was discussed above

- Star contraction: Star contraction involves flipping a coin for each vertex/ component and finding the heads and tails. This is necessary in star contraction, so we contract into the head and so that we can determine what are the star centers and star satellites. Particularly, we make 0 mean you are a satellite (false) and 1 mean you are a star center (true).

Here are some implementation details: Our algorithm takes in a graph structure as input and prints out the edges of the MST

Key data structures:

- Union find data structure: Our union find is based on having a struct which holds information about the parent and rank of each node. This allows us to store information about which component each node belongs to in order to determine which edges are valid edges to contract.

- Graph representation: We model the undirected graph with an underlying representation of a directed graph (so a directed edge is listed in the graph twice for the two directions). We based our representation off of the representation used in assignment 3. (Please see the Approach section for more information on how we decided on our graph representation).

Key operations:

- Find parent and union (read a paper which used compare and swap and that worked pretty well and allowed us to not have to lock up an entire section in order to call these functions)

- We didn’t use the rank attribute for the parallel version of Borůvka’s because that would require an atomic increment which we thought would result in some slowness

Excessive computation and dependency challenges:

- The algorithm is broken down into two main components: finding minimum edges out of each component and contracting the graph, each of which posed its own challenges throughout the process of parallelizing

- Finding min edges could be computationally expensive based on what axis of parallelism is necessary, especially since we were using the same graph on every iteration rather than actually creating a new contracted graph. We found that we were doing extra work here and had to change our approach as we explain in the next section

- The contraction stage also had dependencies based on the union-find operations that were done on both endpoints of the edge being contracted. This introduced a critical region that we had to fix. Our approach to account for this is also explained in the next section

Other challenges:

- Creating graphs (explained in the Approach section)

- Writing a lock-free union find data structure, so that we could decrease the granularity of our locking and critical sections

Approach

Technologies:

- We used the GHC machines (specifically using GHC 26 for our performance testing) and C++ for this project. We also used some Python for graph creation and correctness checks. In order parallelize our implementation on the CPU we used OpenMP primitives.

| Property | Spec Detail on GHC 26 |

| Architecture: | x86_64 |

| Thread(s) per core: | 2 |

| Core(s): | 6 |

Graph Representation:

Initial idea: We determined that we wanted some kind of adjacency list because an adjacency matrix for high vertex counts would be space inefficient (to add to the space, we also needed weights so we would not be able to just use a boolean matrix). Because we needed weights on the edges, we started by creating an Edge struct type that held a source, destination, and weight and then made an adjacency list such that each node had a linked list of all its edges. As we started working with this idea and implemented a sequential version of Borůvka’s with it, we realized that the linked list would not parallelize well and it would also require a lot of overhead in ensuring correctness if we continued with it for the parallel version.

Final idea: We modeled the undirected graph with an underlying representation of a directed graph (so a directed edge is listed in the graph twice for the two directions). We based our representation off of the representation used in assignment 3. Vertices were numbered from 0 to n-1 where n is the number of vertices. We used three arrays, one for offsets, one for destination vertices, and one for weights. The offsets array allowed us to index into the destinations and weights arrays (offset[i] gave us where vertex i’s out-edges started).

Note: The final graph representation, although easy to visualize, does not lend itself well to updating the graph itself. In 15-210 when we implemented Borůvka’s algorithm we could easily create a new graph by simply creating new vertex and edge sets every time we contracted the graph. Because of the calculation of offsets which would require sorting (and also require roughly twice the graphs size in space as we essentially make a copy of the graph), we decided we would keep the original graph and always operate on the original graph. We used a union-find data structure to handle the task of finding the component a vertex belonged to. The inability to update the graph as it contracts was a source of challenges as we will mention in the description of our many attempts at optimizations as detailed below.

Graph Creation:

We had wanted to use large graphs created on SNAP, but after working with this and the assignment 3 code, we realized the undirectedness and weights of our graph made translation from assignment 3’s conversion code to our representation difficult. Because of that, we decided on creating our own graphs using pseudorandomness for generating edges and weights. Because our graph representation required sorting edges by source node in order to figure out offsets and C++ is not the easiest language to use for such a purpose, we determined our graph structure and values with Python, did all the necessary edge generation and sorting, wrote it out to a text file, and had C++ create the actual graphs using the file information.

For initial testing we made small graphs of about 20 to 30 nodes and 100 to 1000 edges. Because of the pseudorandomness there was no guarantee that the graphs would be connected, so we had to update our code to handle cases of disconnected graphs. Once we had the sequential version working (we tested sequential correctness by hand for smaller graphs), we used it as a correctness checker for our parallel versions. For correctness checking we removed the randomness for edge weights to ensure all edges had distinct weights (because there is guaranteed to be one correct MST if all edges are distinct). The validation program for checking correctness was also written in Python.

Note that we chose to use Python for the graph creation and algorithm validation because these two tasks are just initial and ending procedures that are not part of the speedup we were trying to achieve for Boruvka's algorithm.

Sequential Implementation:

The sequential version does the following:

- Looks through all the edges

- Finds the representative vertices of the source and destination (if they are in the same component - i.e. have the same representative vertex - then we move onto the next edge because this one has already been contracted. Otherwise save it as that component’s minimum weight edge if it is the lightest edge seen so far)

- Once that is complete for all components, use edge contraction to contract the selected edges

This process continues until either the number of components is 1 (this is the case for a connected graph), or until the number of components does not change after an iteration (we know the number of components must decrease by at least one while there are edges to contract)

Parallel Implementations:

Initial attempt: We started by taking the sequential version as it was written and adding parallel for loops. This introduced the need for two critical regions. The first critical region was in the finding minimum edges phase because we had to find the minimum weighted edge which is hard when there are multiple threads reading and writing to the same min address (we needed to implement something like CAS in order to be consistent). The second critical region came in the contraction phase. Because our loop condition relied on the number of remaining components which would have to be decremented after every edge was contracted and the inserts in the mst_edges array relied on incrementing an index variable which needed to be modified by only one thread at a time.

Note: Although the following 2 versions did not yield any speedup (they actually performed much worse than our baseline sequential version), working through all the attempts helped us learn more about the structure of Borůvka’s algorithm and important concepts which ultimately helped us pinpoint smaller issues that helped with later parallel versions

What we learned as we were writing the 2 versions below:

- Did not need to loop through num_components. Instead just have a can_be_contracted boolean. The idea is that if there is an edge to be contracted in the current iteration, the part of the algorithm that finds minimum edges out of each component will identify that edge. That means in our parallel algorithm’s contraction phase, if any thread gets past the check of an edge not being in the same component, we know we have an edge to be contracted. This is especially useful in star contraction. The sequential algorithm kept track of the number of components from the previous iteration compared to the number of the current iteration. If that number had not changed between iterations we would be done. We realized this would have break for star contraction because star contraction depends on coin flips, and if you just so happened to get a distribution of coin flips that did not allow for any edges to be contracted on that iteration then the check against previous number of components would break early and the MST would not be complete

- Did not need to have an mst_edges_index. We realized that if we could determine which vertex of an edge was contracted into which one, we could always put the edge into the vertex that was contracted. The idea behind this is that once a component has been contracted into another component, it will not be contracted again, so we can always put the edge in indices of contracted vertices without worrying about them being overwritten. We rewrote the union find for the edge contraction so that we always contracted the smaller vertex id into the larger vertex id. In star contraction, because we are using random coin flips and we do not have a say in whether the smaller vertex id is the one that gets contracted we actually need a mst_edges array of size n so that the vertex that does get contracted can write directly into its spot. By doing this, we just have to do a little extra work at the end of finding the n-1 edges that are in the MST (this step is not difficult because we have initialized all edges in the mst_edges array to be dummy edges with src 0 dest 0, and we know if a vertex is never contracted into another one, then it will never write to that spot, so we just throw out all the edges that are “dummy edges”). We already had to do this anyways since we handle the case of disconnected graphs where there would be fewer than n-1 edges in the MST. Note that we also guarantee that there will not be a race with this insertion technique because in star contraction we check using compare and swaps to ensure that one vertex is not being simultaneously contracted by multiple other vertices.

Find Minimum Edges by Component with Edge Contraction: To get rid of the first critical region, we tried to parallelize over the vertices. Essentially we wanted to have each vertex that was a representative vertex check all the other vertices for those in the same component. If a vertex was found to be in the same component, they would then iterate through the edges belonging to that vertex looking for the minimum edge. The critical region was not needed in this case because we actually parallelize over the component. We started testing this on a 5000 node graph with 94,300 edges, but it was slower than the sequential.

Find Minimum Edges by Component with Star Contraction: Although this implementation ended up being faster than the edge contraction version on the 5000 node graph, it was still too slow. We created a 1,000,000 node graph with 9,000,000 edges and this version could not complete even after running for over 2 minutes. We determined that trying to mimic the idea of parallelizing finding min_edges over components was not the best idea as we were doing too much unnecessary work. For each iteration of finding minimum edges, we were essentially doing O(n^2) work by having each representative vertex look through all the vertices. A simple example of where this is bad would be in the first iteration, when every node is its own representative vertex. It should only have to look at its own edges and be done, but instead it has to loop through all 999999 other nodes and call find_parallel on them to make sure they’re not in the same component.

Note (regarding the two versions of the algorithm below where we find minimum edges out of each component by looping over the edges): when given source and destination vertices for an edge, we only update the component the source is in, even though we could check the destination too. We make this choice in order to avoid deadlock since we are using fine-grained locks. If we lock on component 1 and then component 2 for the source and destination, if another edge is being checked at the same time but its component 1 and component 2 just happen to be flipped (which can happen given our underlying directed representation of an undirected graph), there would be deadlock.

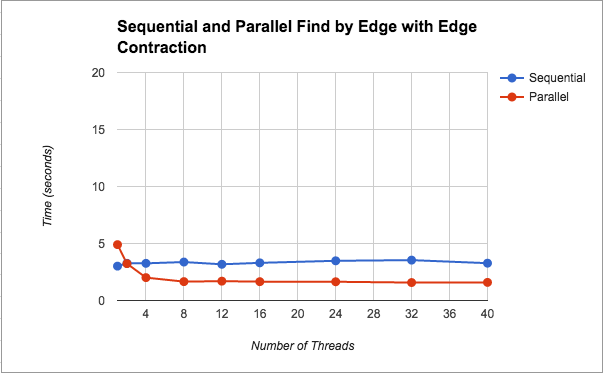

Find Minimum Edges by Edge with Edge Contraction: Having been unsuccessful with our finding minimum edges by vertex idea, we went back to our first parallel version taken from the baseline sequential code with the original two critical sections. We realized that fine-grained locks would help solve our problem. That way instead of locking the entire min_edges array we could just lock by component. We kept everything else the same. This yielded much better results, which are graphed (Figure 1) and discussed in the Results section.

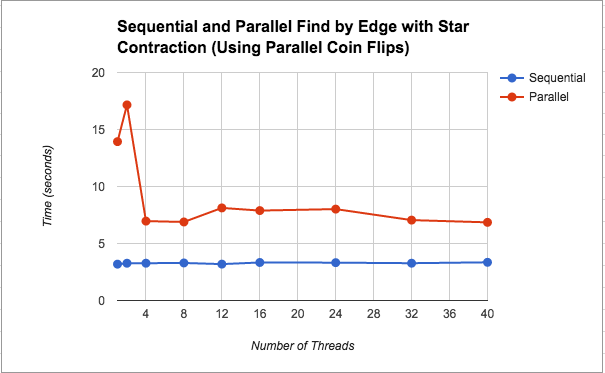

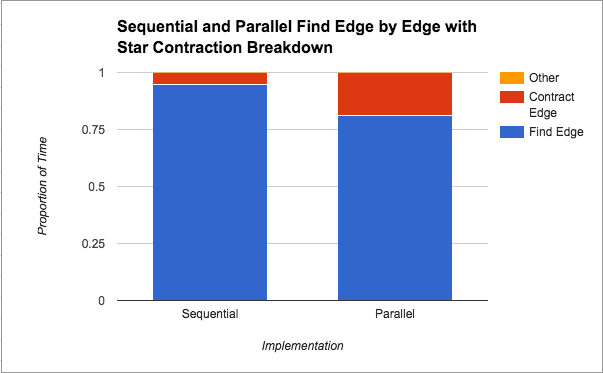

Find Minimum Edges by Edge with Star Contraction: We wanted to further speed up the “Find Minimum Edges by Edge with Edge Contraction” version, by parallelizing the contraction step using star contraction. This attempt actually slowed down significantly to the point that it was about 2.5x slower than the sequential version on average. We discuss our thoughts about this slowdown in the Results section under this implementation's performance graph (Figure 2). Additionally, Figure 4 shows a breakdown of where in the algorithm the most time is spent for this implementation. Please see below for a further discussion on this.

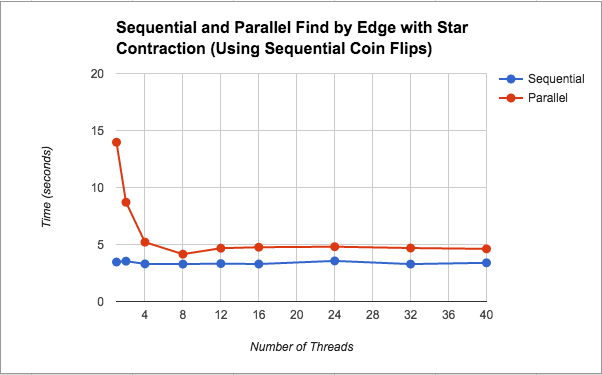

Because this version slowed down significantly, we experimented with removing some parallel sections in case they were causing too much overhead. Since we did not know much about how rand() is implemented under the hood, we took out the parallelization of the coin flips in case something about the implementation was hurting our performance. This change actually caused our parallel star contraction version to speed up. Although the parallel implementation was still slower than the sequential version it was only about 1.3x slower on average. Further discussion about this change and its performance is included in the discussion section under its graph (Figure 3). Additionally, Figure 5 shows a breakdown of where in the algorithm the most time is spent for this implementation. Please see below for a further discussion on this.

Please note: because parallelizing on the CPU with OpenMP presented us with more challenges than we anticipated (as explained above), we were not able to get to the CUDA implementation nor try image segmentation. We thought that focusing on the CPU implementation allowed to understand more concepts and application of what we learned in the class. We also some some cool speedups which was nice. If we didn’t we were able to understand where the limitations, dependencies, and computational problems came from.

Results

Given that we are running on machine with 6 cores and 2 execution contexts per core, we know that we have 12 execution contexts. We expected to get performance benefits and a speedup up until 12 threads. After 12 threads though, we expected either worse speedup or very little improvement; we still decided to run it just to see what would happen to performance due to creation and scheduling overhead.

In order to collect results, we using wall clock timing with the CycleTimer module as in past assignments to determine performance of our algorithm on our large graph. It is important to note that we did not include time taken to create our graphs because this is independent of our algorithm’s implementation.

Runtimes: We decided to collect results from running our algorithm on the largest graph because this would be where the effect of parallelism is most noticeable. This graph had 1,000,000 nodes and 9,000,000 edges (note that for preliminary testing we ran the algorithm on smaller graphs, but the significant results displayed below are from the large graph). We ran the algorithm on thread counts of 1, 2, 4, 8, 12, 16, 24, 32, and 40. Below we show our results visually. A common trend is that the performance plateaus after a certain thread count. We think that the graph plateaus due to the overhead in thread creation. Having a lot of threads starts off pretty useful, but as you contract there will be fewer components and thus less meaningful work to do so threads use up processor resources unnecessarily. (Our notion of meaningful goes back to the idea that we are always looping over the original graph, so as more of it is contracted, the algorithm still has to loop through the contracted edges and check that they are indeed in the same component before moving on to another edge.)

In Figure 1, we see that we get about a 2x speedup from increasing the number of threads. We also notice that after 8 threads, performance plateaus. We think that the performance flattens even with more threads because as the graph contracts there is less meaningful work. As mentioned before, by this we mean that, we are looping on the original graph on every iteration (i.e. we are not creating a new edge and vertex set with a smaller graph), so threads are still have to loop over edges that have been contracted, and the scheduling overhead may not be worth the help from extra threads. This parallel version using find by edge saw speed up because for finding edges, we were not doing unncessary work as we had done in the previous versions of finding by components. We additionally introduced the idea of fine grained locking, so that we are not locking the entire data structure.

As we see in Figures 2 and 3, using star contraction actually caused the parallel version to become slower than the parallel version by edge contraction. We think this is due to the overhead of spawning threads, scheduling them, and then possibly having to execute more iterations of the while loop since star contraction is not guaranteed to contract all the edges at once (from an asymptotic analysis perspective, the math works out so that the edges number of edges left to be contracted decreases by a constant factor, but the actual performance on the machine may be worse). We think that the flunctuations in the graph could be due to the randomness of the contraction.

As seen in Figure 3, after removing the parallel coin flips (i.e. calls to the C++ rand() function) we saw better speedup for this parallel version (although still not as good as the sequential version). As for the issue of the parallel version being slower, we think the discussion under Figure 2 still applies here. Regarding the bit of speedup from changing the coin flips, we did not find very much information about the rand() implementation despite our research. Some people mentioned it was a slow function, but even after we implemented our own pseudorandom number generator using xorshift, the performance did not improve. Based on our own thoughts, we believe the slowdown could be due to the fact that it is a pseudorandom number generator that may have serial dependencies on the previous number generated, and so parallelizing was just adding extra overhead.

Thoughts and exploration of slow down: We think the slowdown in the star contraction implementations is probably due to there being more iterations of the algorithm in this version. This hypothesis is confirmed when we added a counter for the number of iterations the implementations were taking. On the large graph, the sequential version finished in 8 iterations. After running the star contraction version a few times, we found that it took between 26 and 28 iterations on average.

Something interesting about all our performance graphs above is that on one thread the parallel version is slower than the sequential version. This was unexpected as one would think that running on one thread should have the same runtime as the sequential version. Even after testing and changing our parallel code so that it was as similar to the sequential code as we could get, the slowdown still exists, and we are currently unsure what is causing it (but it is something we will continue to look into).

Please note: we were not able to collect results for the other 2 implementations because the runtime was way too slow to complete for the large graph.

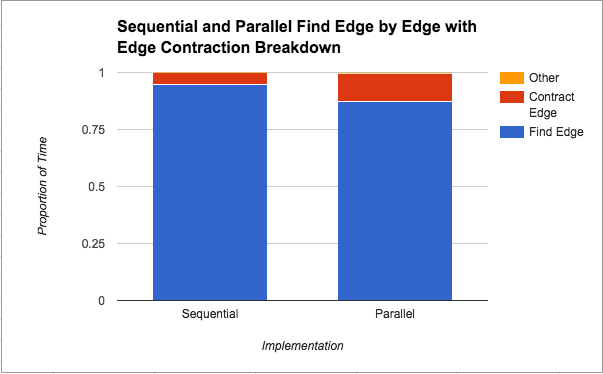

Algorithm Breakdown: Below we analyze the breakdown of work performed by our algorithm into three categories: finding edges, contracting edges, and other work (this includes initializing variables and freeing variables).We run the program on 8 threads to generate the data that is plotted below because we saw from the graphs above that the best performance came at 8 threads for all implementations.:

In Figure 4 above, we see that finding the minimum edges step takes the most amount of time from the total time, although that proportion of time decreases in the parallel version. Since edges are being contracted in the same manner (both implementations use edge contraction), this suggests that parallelizing the finding of the minimum weight edge out of each component does in fact help. Unfortunately, there is a limit to how much that parallelization can help as we saw from Figure 1 that the speedup plateaus at about 2x. We attribute this limitation to the locking that needs to be done on the data structure by nature of the “taking a minimum” operation.

As seen in Figure 5, compared to the sequential version, star contraction seems to spend almost triple the proportion of time contracting edges. We can attribute this back to our discussion under Figure 2, where we saw that there were many more iterations of traversing the graph from star contraction. Given that the graphs in Figures 4 and 5 are based on implementations that use the same finding minimum edges technique, we get a sense for how those extra iterations of the algorithm hurt our performance, since in wall-clock time the finding edges should take about the same time, but proportionally, more time has to be devoted to contracting edges.

We notice from both Figures 4 and 5 that the algorithm spends the majority of its time looking for edges to contract. We think that this is probably due to the fact that we have a lot more edges than vertices and for the contraction stage we are only looping through the number of vertices whereas when looking for edges to contract we always have to loop through the number of edges.

References

- 15-210’s notes

- 15-418 Assignment 3

- Paper for lock-free union find

List of Work By Each Student

Equal work was performed by both project members.